Technical Tuesday: getting your AI agents enterprise ready with agent evaluation and scoring

AI agents are rapidly rising in popularity. Deloitte predicts that by 2027, 50% of companies that use generative AI will have launched pilots or proofs of concept with these reasoning, action-taking AI entities. AI agents are being embedded in enterprise processes, executing important tasks that require critical thinking, adaptability, and action.

As interest grows, new AI agent integrated development environments (IDEs) are flooding the market. These promise enterprises the capabilities to quickly and reliably build custom AI agents that are tailored to exact business requirements. However, business leaders need to ask themselves:

What’s unique about these build experiences?

What’s my fastest path to success?

How do I know when my agent is ready for production?

In this blog, I’ll show why an evaluation-driven development philosophy is crucial for getting AI agents into production. I’ll also explain some of the principles of agent scoring and how leading enterprises are using this approach to build trusted, enterprise-grade AI agents.

What is agent evaluation?

So what exactly is agent evaluation, and how is it different from traditional software development testing?

When it comes to gauging the performance of AI agents, unit and integration testing alone won’t cut it. Every developer needs a way to objectively measure an agent's output and its plan (or trajectory) at both build time and runtime. They also need the means to compare the two.

Unlike traditional methods, agent evaluation is an objective assessment of an agent’s performance based on a baseline set of data. It's a systematic approach to measure how well AI agents perform against defined business objectives or user expectations.

Multi-attribute scoring is a core step in building confidence in your agents and deploying them successfully into real-world production environments. The scoring criteria could be:

Quality of output: the accuracy of the information the agent provides, its structure, tone and style.

Appropriate and effective tool use: whether the agent follows tool descriptions, business policies, and guardrails, and how good the tool output itself is. For example, you can measure the accuracy of data retrieved by a retrieval-augmented generation (RAG) service.

Agent planning and task completion: did the agent execute its plan the way you intended?
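To make the third criterion concrete, here is a minimal sketch of one way to grade a trajectory: compare the tool calls you expected with the ones the agent actually made, and score the share of expected steps that appear in order. The `RunScores` record, the `trajectory_score` function, and the step names are illustrative assumptions, not part of any UiPath API.

```python
from dataclasses import dataclass


@dataclass
class RunScores:
    """Graded scores for one agent run, each between 0.0 and 1.0."""
    output_quality: float  # accuracy, structure, tone and style
    tool_use: float        # appropriate and effective tool use
    trajectory: float      # how closely the run followed the intended plan


def trajectory_score(expected: list[str], actual: list[str]) -> float:
    """Share of expected steps matched in order (longest common subsequence)."""
    if not expected:
        return 1.0
    # lcs[i][j] holds the LCS length of expected[:i] and actual[:j].
    lcs = [[0] * (len(actual) + 1) for _ in range(len(expected) + 1)]
    for i, exp_step in enumerate(expected, start=1):
        for j, act_step in enumerate(actual, start=1):
            if exp_step == act_step:
                lcs[i][j] = lcs[i - 1][j - 1] + 1
            else:
                lcs[i][j] = max(lcs[i - 1][j], lcs[i][j - 1])
    return lcs[-1][-1] / len(expected)


# Hypothetical run: the agent skipped the validation step.
run = RunScores(
    output_quality=0.9,
    tool_use=1.0,
    trajectory=trajectory_score(
        ["lookup_customer", "validate_income", "draft_reply"],
        ["lookup_customer", "draft_reply"],
    ),
)
```

This version does not penalize extra steps the agent took. Whether it should is a design choice that depends on how costly unnecessary tool calls are in your process.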

| | Traditional Testing | Evaluation |
|---|---|---|
| Focus | Does the system function correctly? | Agent evaluation focuses on performance, quality, and user experience. It measures how well the agent completed its task and is graded against a ground-truth dataset. |
| Methodology | Testing often uses binary pass/fail criteria. | Agent evaluation uses nuanced evaluation frameworks with multiple criteria and graduated scales for performance. |
| Timing | Testing occurs (primarily) before deployment. | Agent evaluation is done throughout the agent's full lifecycle. |
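The methodology difference can be sketched in a few lines. The example below contrasts a binary exact-match check with a graduated score, then averages the graduated score over a small ground-truth dataset. The string-similarity measure is only a stand-in for whatever grader you actually use (an LLM judge, a rubric, a domain metric), and all function names here are hypothetical.

```python
from collections.abc import Callable
from difflib import SequenceMatcher


def binary_check(expected: str, actual: str) -> bool:
    """Traditional-style assertion: the output either matches or it fails."""
    return expected == actual


def graded_score(expected: str, actual: str) -> float:
    """Graduated score in [0, 1] based on case-insensitive text similarity."""
    return SequenceMatcher(None, expected.lower(), actual.lower()).ratio()


def evaluate(agent: Callable[[str], str], dataset: list[tuple[str, str]]) -> float:
    """Average graded score over (input, expected output) pairs."""
    if not dataset:
        raise ValueError("the ground-truth dataset must not be empty")
    scores = [graded_score(expected, agent(question)) for question, expected in dataset]
    return sum(scores) / len(scores)
```

A response such as `"loan approved."` fails `binary_check` against `"Loan approved"` yet earns a high graded score, which is the kind of nuance a pass/fail test cannot express.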

The benefits of agent evaluation

The agent evaluation approach directly translates technical evaluations into business-focused insights that can be used to continuously improve AI agents and, in turn, the performance and impact of agentic automations. Agent evaluation enables leaders to make informed decisions on:

When an agent is ready for production

Which aspects need further refinement

How to prioritize improvement efforts

Whether an agent meets the organization's quality standards

Businesses can also ensure their AI investments deliver maximum value while minimizing implementation risks:

Risk mitigation: identify potential issues from pre-deployment to production.

Resource optimization: focus improvement efforts on the most critical areas.

Quality assurance: ensure agents meet minimum performance thresholds.

Continuous improvement: track progress over time through consistent metrics.

Stakeholder confidence: provide transparent reporting on agent readiness.
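As a rough illustration of how minimum performance thresholds can drive a readiness decision, the sketch below compares per-criterion scores with thresholds and lists the failing criteria, largest gap first. The criterion names and the threshold values are assumptions chosen for the example.

```python
def readiness_report(
    scores: dict[str, float], thresholds: dict[str, float]
) -> dict[str, object]:
    """Flag criteria below their minimum threshold, worst shortfall first."""
    # A missing score counts as 0.0 so unmeasured criteria are never waved through.
    gaps = {name: thresholds[name] - scores.get(name, 0.0) for name in thresholds}
    failing = sorted(
        (name for name, gap in gaps.items() if gap > 0),
        key=lambda name: gaps[name],
        reverse=True,
    )
    return {"ready": not failing, "needs_work": failing}


report = readiness_report(
    scores={"output_quality": 0.9, "tool_use": 0.6, "task_completion": 0.75},
    thresholds={"output_quality": 0.8, "tool_use": 0.8, "task_completion": 0.8},
)
# report["ready"] is False; tool_use has the largest gap, so it is listed first.
```

Sorting by gap size is one simple way to decide where to focus improvement efforts. A real process would likely weigh business impact as well.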

Agent evaluation has clear advantages over traditional testing approaches. Yet enterprises benefit even further when their evaluation criteria are predefined and built into a structured framework and user experience.

Agent scoring

Many aspects must be considered to create a production-ready AI agent. You need to craft a well-structured prompt for instructing the agent. You need to choose the right tools for it to complete its tasks, and then perform sufficient evaluation for the diverse set of scenarios it may encounter. Ultimately, you might ask: how do I know if I’m on the right track to success with my agent?

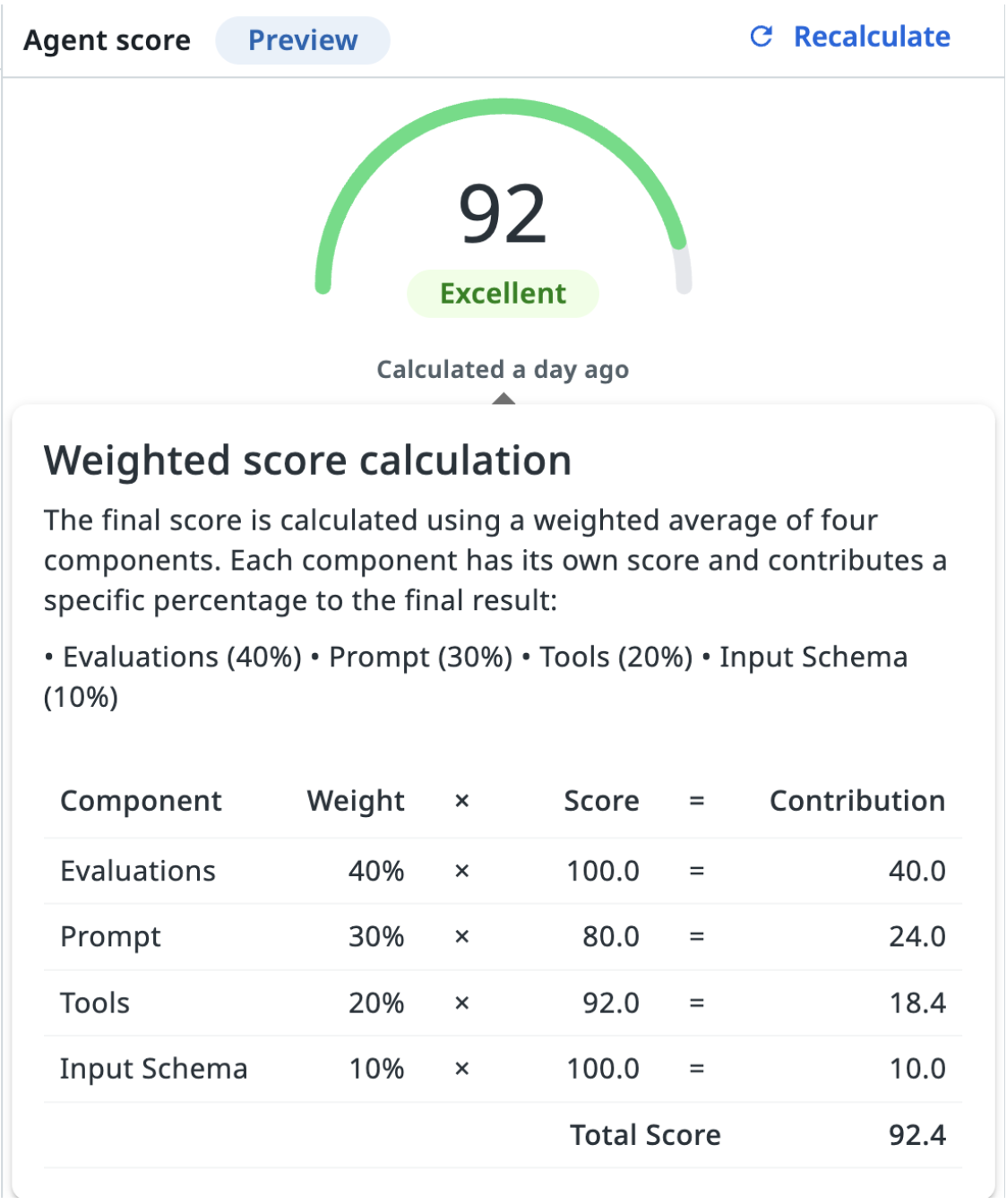

This is where the agent score from UiPath comes in. Agent score is a comprehensive measurement system that examines and weights the importance of all the different elements that make up the agent.
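The post does not publish the actual weights behind the agent score, so the following is only a sketch of the general idea: a weighted average over the four areas this section goes on to describe. The weights in `ASSUMED_WEIGHTS` and the 0 to 100 scale are invented for illustration.

```python
# Illustrative weights only; the real agent score weighting is not public here.
ASSUMED_WEIGHTS = {
    "prompt_quality": 0.30,
    "tool_use": 0.25,
    "input_schema": 0.15,
    "evaluations": 0.30,
}


def agent_score(
    component_scores: dict[str, float],
    weights: dict[str, float] = ASSUMED_WEIGHTS,
) -> float:
    """Weighted average of component scores (each 0.0-1.0), scaled to 0-100."""
    total_weight = sum(weights.values())
    weighted = sum(weights[name] * component_scores[name] for name in weights)
    return 100 * weighted / total_weight
```

Dividing by the total weight keeps the result on the same scale even if the weights do not sum to exactly 1.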

1. Prompt quality

The agent's instructions are evaluated for:

Clarity: how easily understood and unambiguous are the agent’s instructions?

Completeness: are all necessary details for task execution included?

Consistency: do agent instructions align with business goals without any contradictions?

Logical reasoning: does the agent follow a step-by-step thinking process?

Examples: are the reference examples that guide agent responses of high quality?

2. Tool use

Evaluates the agent's available tools and resources:

Appropriate scope: does the agent have the right number of tools (ideally under 20)?

Documentation quality: are there clear explanations of each tool's function?

Relevance: do the tools and context sources used by the agent directly support business tasks?

Escalation mechanisms: are there clear pathways for handling edge cases?

Completeness: does the agent have all the resources needed to perform well?
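Some of these checks are mechanical enough to automate. The sketch below reviews a tool list for scope, documentation, and an escalation path. The `Tool` record, the `is_escalation` flag, and the five-word minimum for a description are assumptions made for this example. Only the guideline of fewer than 20 tools comes from the list above.

```python
from dataclasses import dataclass

MAX_TOOLS = 20             # the guideline above: ideally under 20 tools
MIN_DESCRIPTION_WORDS = 5  # assumed cut-off for a usable description


@dataclass
class Tool:
    name: str
    description: str
    is_escalation: bool = False  # hypothetical flag for human hand-off tools


def review_tools(tools: list[Tool]) -> list[str]:
    """Return human-readable findings; an empty list means no issues found."""
    findings = []
    if len(tools) >= MAX_TOOLS:
        findings.append(f"{len(tools)} tools configured; aim for fewer than {MAX_TOOLS}")
    for tool in tools:
        if len(tool.description.split()) < MIN_DESCRIPTION_WORDS:
            findings.append(f"{tool.name}: description is missing or too short")
    if not any(tool.is_escalation for tool in tools):
        findings.append("no escalation path for edge cases")
    return findings
```

Relevance and completeness are harder to check with rules like these and usually need a human reviewer or a model-based grader.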

3. Input schema

Ensures proper configuration for business data processing:

Argument processing: are user inputs correctly handled?

Placeholder alignment: are the agent’s input parameters properly configured?

Data validation: do agent inputs meet the required specifications?
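Placeholder alignment and data validation lend themselves to a short example. The sketch below assumes prompts reference inputs with a `{{name}}` placeholder syntax and that the input schema is a simple mapping of argument names to Python types. Both are assumptions for illustration, not a description of how any particular product stores its schema.

```python
import re

# Assumed placeholder syntax: {{argument_name}}, optional inner whitespace.
PLACEHOLDER = re.compile(r"\{\{\s*(\w+)\s*\}\}")


def check_placeholders(prompt: str, input_schema: dict[str, type]) -> dict[str, set[str]]:
    """Compare placeholders used in the prompt with arguments the schema declares."""
    used = set(PLACEHOLDER.findall(prompt))
    declared = set(input_schema)
    return {"undeclared": used - declared, "unused": declared - used}


def validate_inputs(inputs: dict[str, object], input_schema: dict[str, type]) -> list[str]:
    """Return one error message per missing or wrongly typed argument."""
    errors = []
    for name, expected_type in input_schema.items():
        if name not in inputs:
            errors.append(f"missing argument: {name}")
        elif not isinstance(inputs[name], expected_type):
            actual = type(inputs[name]).__name__
            errors.append(f"{name}: expected {expected_type.__name__}, got {actual}")
    return errors
```

For instance, a prompt that mentions `{{applicant}}` while the schema only declares `loan_id` and `amount` would report `applicant` as undeclared and `amount` as unused.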

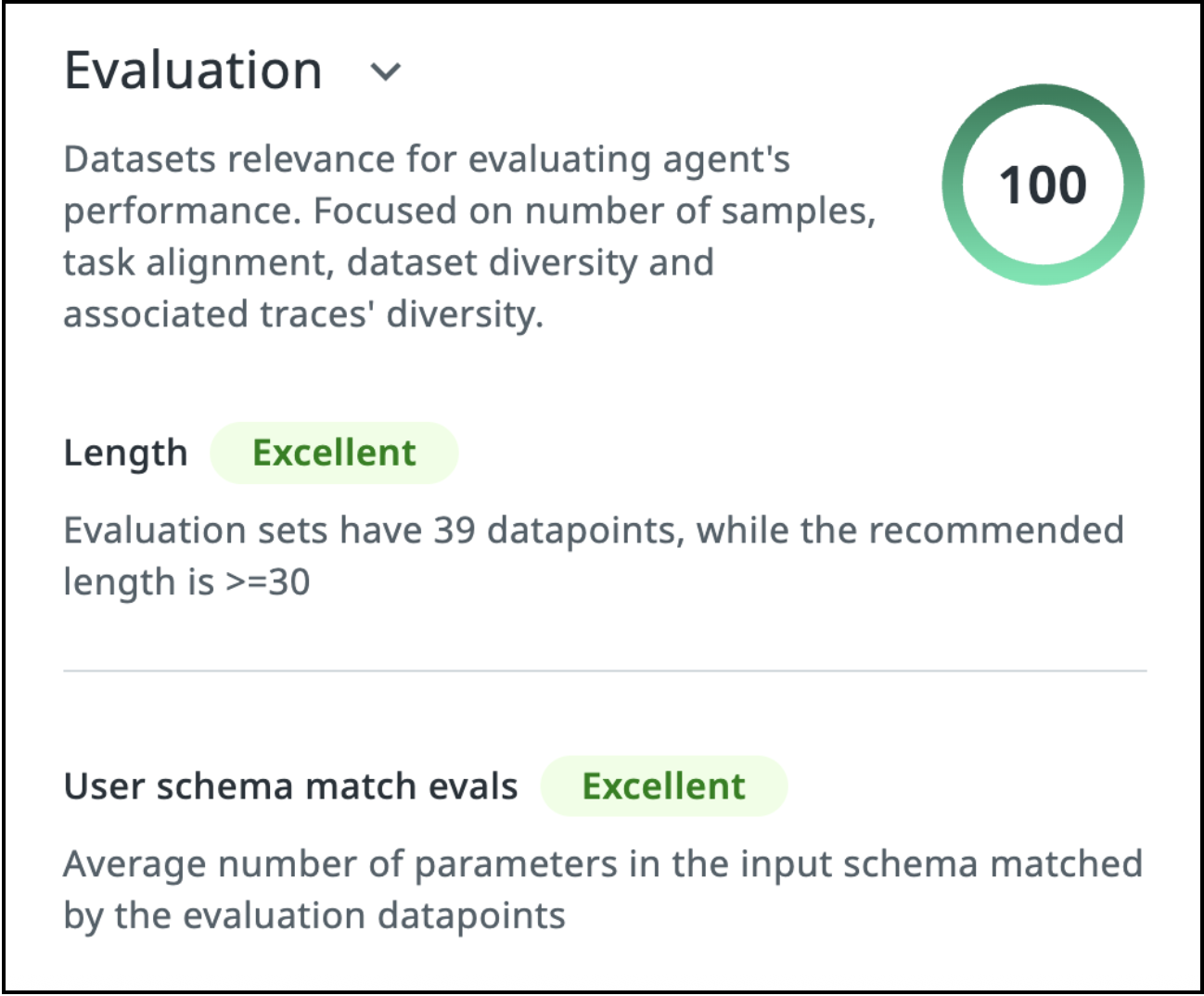

4. Evaluation diversity and performance

Assesses how thoroughly the agent has been tested:

Testing depth: is the agent run through a minimum of 30 unique test scenarios?

Test coverage: are all tools and functions properly tested?

Business relevance: do the tests align with actual business tasks?

Diversity: does the range of scenarios reflect real-world usage?
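Testing depth and tool coverage can be checked with a simple pass over the evaluation set. In this sketch each scenario is a dictionary with an `input` and a list of `expected_tools`. That shape is a hypothetical one chosen for the example, while the minimum of 30 unique scenarios comes from the list above.

```python
MIN_SCENARIOS = 30  # minimum number of unique test scenarios, per the list above


def coverage_report(scenarios: list[dict], agent_tools: set[str]) -> dict[str, object]:
    """Count unique scenarios and list tools that no scenario exercises."""
    unique_inputs = {scenario["input"] for scenario in scenarios}
    exercised: set[str] = set()
    for scenario in scenarios:
        exercised.update(scenario.get("expected_tools", []))
    return {
        "unique_scenarios": len(unique_inputs),
        "enough_scenarios": len(unique_inputs) >= MIN_SCENARIOS,
        "untested_tools": sorted(agent_tools - exercised),
    }
```

Counting unique inputs rather than rows means that duplicated scenarios do not inflate the depth figure. Business relevance and diversity still need human judgment.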

Measures actual agent performance against expectations:

Average evaluation scores: what is the overall success rate on test scenarios?

Business metrics alignment: how well does the agent meet specific business objectives?

Consistency: how reliable is the agent’s performance over multiple executions?
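Average score and consistency can both be derived from repeated runs of the same scenarios. The sketch below assumes you have already collected a list of graded scores (0.0 to 1.0) per scenario. It reports the overall average and the largest per-scenario standard deviation as a rough consistency signal. The function name and data shape are illustrative.

```python
from statistics import mean, pstdev


def summarize_runs(scores_by_scenario: dict[str, list[float]]) -> dict[str, float]:
    """Overall average score and the worst per-scenario spread across repeated runs."""
    per_scenario_mean = [mean(runs) for runs in scores_by_scenario.values()]
    per_scenario_spread = [pstdev(runs) for runs in scores_by_scenario.values()]
    return {
        "average_score": mean(per_scenario_mean),
        "worst_spread": max(per_scenario_spread),
    }
```

A high average with a large spread suggests that the agent can do the task but does not do it reliably, which is often a prompt or tool-description issue rather than a capability gap.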

Agent optimizer

Evaluations and agent scoring are great, but what should you do with these results? How can you use them to make your agents better?

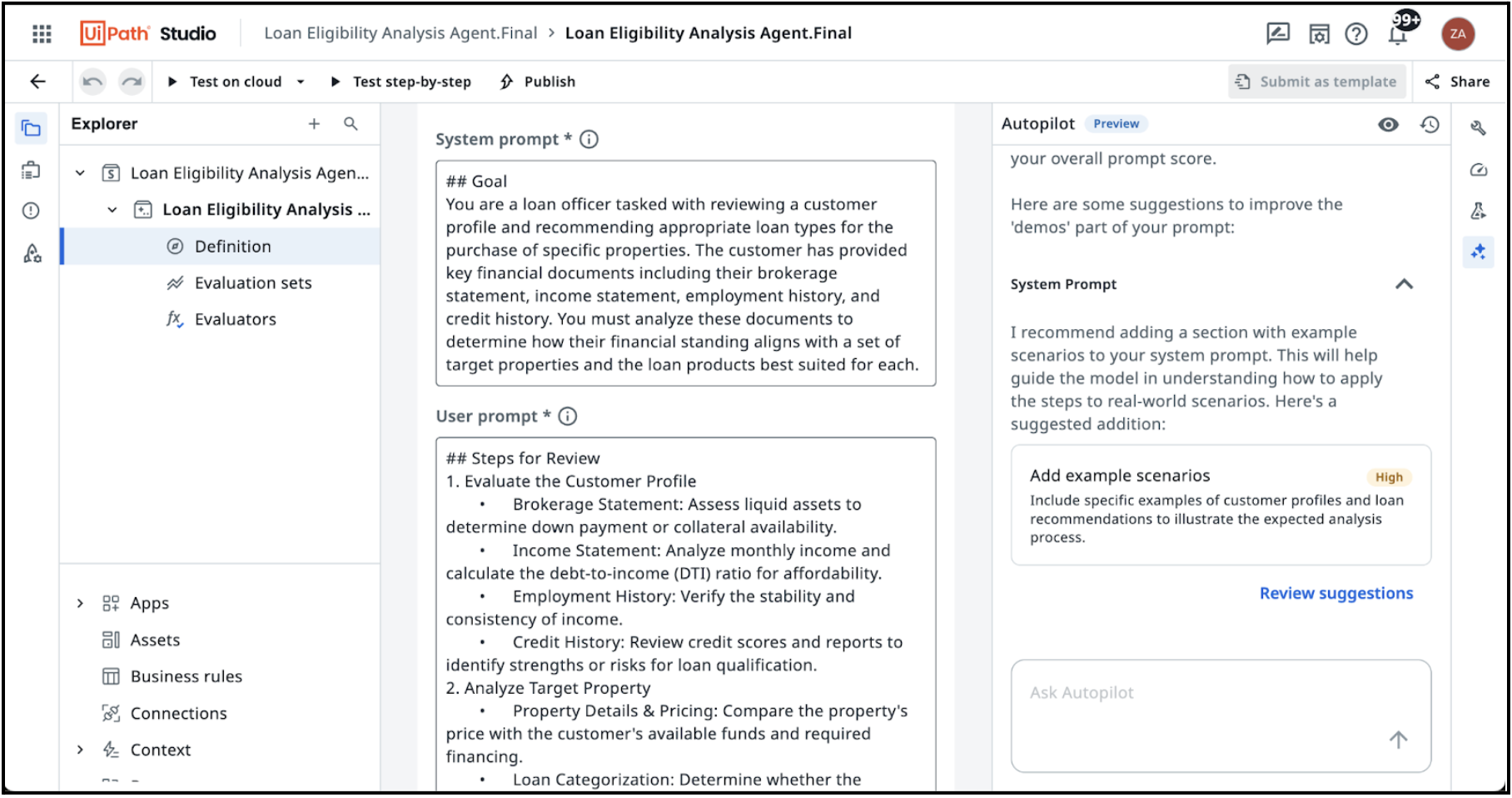

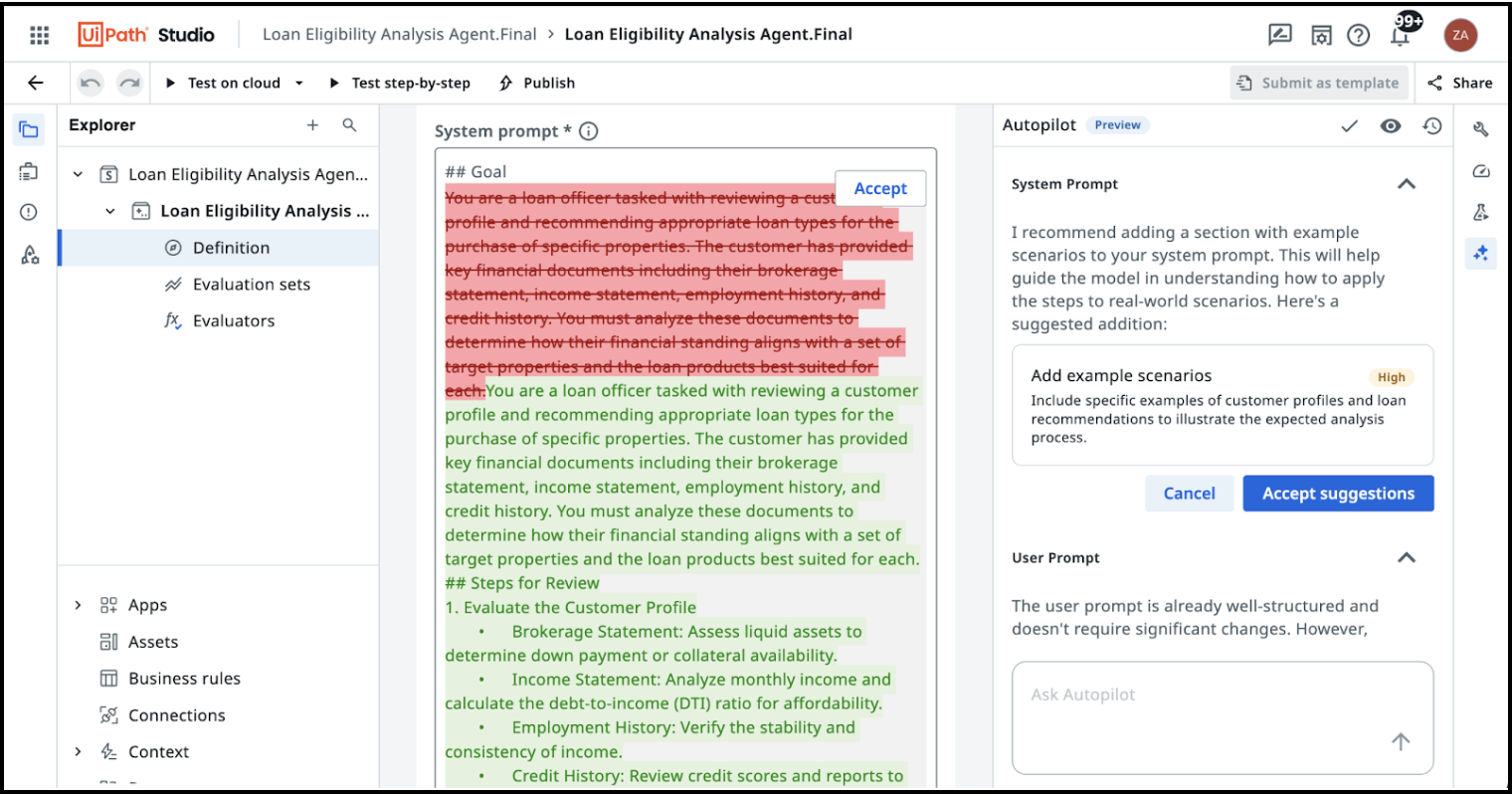

The agent optimizer from UiPath transforms agent health score metrics into specific, actionable recommendations for enhancing agent performance. It functions as an intelligent analysis system that prioritizes improvements based on impact potential, implementation effort, and alignment with business objectives.
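How the agent optimizer ranks its recommendations internally is not described here, so the following is purely an illustrative sketch of prioritizing by the three factors named above. The 1 to 5 scales and the `impact * alignment / effort` formula are assumptions, not the product's method.

```python
from dataclasses import dataclass


@dataclass
class Recommendation:
    title: str
    impact: int     # 1 (minor) to 5 (major) expected improvement
    effort: int     # 1 (trivial) to 5 (large) implementation effort
    alignment: int  # 1 (weak) to 5 (strong) fit with business objectives


def priority(rec: Recommendation) -> float:
    """Higher is better: reward impact and alignment, discount by effort."""
    return rec.impact * rec.alignment / rec.effort


def prioritize(recs: list[Recommendation]) -> list[Recommendation]:
    """Return recommendations ordered from highest to lowest priority."""
    return sorted(recs, key=priority, reverse=True)
```

Under these assumed scales, a cheap, well-aligned fix such as clarifying a tool description can outrank a high-impact but expensive prompt rewrite.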

Across all of the core attributes that the agent score from UiPath measures, the agent optimizer offers specific recommendations to improve the prompt, tool use/descriptions, input arguments, and evaluations. It can even apply these recommendations directly to your agent. These recommendations are delivered through UiPath Autopilot™ and its conversational interface.

Working together, the agent score, agent optimizer, and Autopilot deliver several benefits that accelerate time to value with UiPath Agents:

Efficiency gains: manual analysis time is reduced by instantly identifying the highest-priority improvements

Risk reduction: emerging performance issues are identified early on and can be fixed before they snowball into real failures

Enhanced ROI: return on AI investment is maximized through continuous performance improvement

Strategic alignment: agent performance directly supports key business objectives and adapts optimization priorities as business needs evolve

See how fast and effective it is to create, customize, deploy, and evaluate coded enterprise agents using the UiPath Platform, with no complex infrastructure needed to set up or manage:

Achieving accuracy and impact with agent evaluation

Let’s see how agent evaluation fits into the wider agentic automation approach. Here’s a demo of agentic automation in an example loan origination process. Agent score and agent optimizer help to evaluate the performance of the loan origination agent, and provide optimization suggestions that can improve it further:

UiPath customers are already embracing the agent evaluation capabilities of the UiPath Platform™. TQA, a technology services and consultancy leader, is using UiPath Agent Builder to create multiple internal agents for its sales and marketing teams. Agent scoring and evaluation have been a core part of the development team’s approach, giving them confidence in the performance and accuracy of their multiple agents.

Dillan Hacket, Innovation Director, had this to say:

“One of the things which makes the UiPath Agent Builder a truly enterprise solution is its guardrails, particularly the ability to evaluate and test the agent and its results, as well as for the agent to escalate to humans during runtime where it is unsure.

GenAI can often be seen as a 'black box' where we aren't able to understand exactly what it is doing and therefore cannot truly trust its results to be correct and, importantly, consistent. If you ask other popular GenAI tools the same question over and over, you will likely get varying results, formats, etc. This inconsistency means that it cannot be used with confidence in an enterprise setting where we require defined, consistent, and trusted outputs and decisions.

The evaluation tool lets us create many different test scenarios to run through the agent and ensure its outputs are correct and consistent in all instances, giving the necessary confidence that the agent will act as expected each time, every time.”

UiPath offers a comprehensive and differentiated approach to agent scoring. With the agent score, optimizer, and Autopilot from UiPath, businesses can evaluate, measure, and enhance AI agents across their complete lifecycle. The result is performant, reliable, and trustworthy AI agents for the enterprise—agents that understand your business and are primed to meet your objectives.

Get started creating trusted enterprise agents that collaborate with robots and other AI agents using the UiPath Agent Builder free trial. To learn more about our unique approach to AI agent development and evaluation, watch our latest video demos.

Director, Product Management, UiPath
