The Literary Antecedents of the Laws of Robotics

Share at:

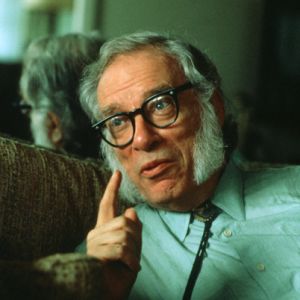

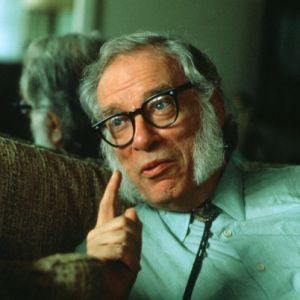

In May 1939, Isaac Asimov attended a meeting of the Queens Science Fiction Society, where he met Earl and Otto Binder. The brothers had recently published a short story called "I, Robot" about a sympathetic robot named Adam Link, who is motivated by honor and love. The story became the first in a series of ten, and it inspired Asimov to write his own tale of a sympathetic robot.

About two weeks later, Asimov submitted "Robbie" to Astounding Science-Fiction editor John W. Campbell. Campbell, however, found the story too similar to Lester del Rey's "Helen O'Loy" (1938) and rejected it. "Robbie" was eventually published in 1940, under the title "Strange Playfellow," in Super Science Stories. Asimov remained interested in robots' capacity for ethical thought and action throughout his career.

A Fruitful Collaboration

On December 23, 1940, Asimov had a truly seminal conversation with Campbell. The result: the Three Laws of Robotics. Asimov credited Campbell with originating the Laws, while Campbell insisted that Asimov already had the idea and that he had simply helped to state it explicitly. Later, the two agreed that the Laws were the product of their synergy.

Regardless of who had the idea, it was Asimov who incorporated the Laws into his fiction and popularized them. The Laws, first explicitly stated in the short story "Runaround," are as follows:

A robot may not injure a human being, or, by inaction, allow a human being to come to harm.

A robot must obey the orders given it by human beings, except where such orders would conflict with the First Law.

A robot must protect its own existence, so long as that protection doesn't conflict with the First or Second Laws.

Asimov noted that he included the language about "inaction" after reading "The Latest Decalogue," a poem by Arthur Hugh Clough. The poem contains the satirical lines "Thou shalt not kill; but need'st not strive / Officiously to keep alive."

Asimov later added a fourth law, which he called the Zeroth Law because it takes precedence over the other three: A robot may not harm humanity, or, by inaction, allow humanity to come to harm. The Zeroth Law is first stated explicitly in the novel Robots and Empire, although the concept originally appeared in the short story "The Evitable Conflict."

These Laws quickly became entwined with science fiction literature. Yet Asimov wrote that he shouldn't receive credit for their creation because the Laws are "obvious from the start, and everyone is aware of them subliminally. The Laws just never happened to be put into brief sentences until I managed to do the job. The Laws apply, as a matter of course, to every tool that human beings use."

Time for New Laws of Robotics?

As technology has evolved, Asimov's Laws have provided a useful framework for discussing the ethical questions raised by the use of robots. It's generally accepted that the Laws are not sufficient constraints by themselves, but they remain one of the best starting points for discussions about artificial intelligence. And the basic premise that robots must not harm humans helps ensure that the general public considers robots' actions acceptable and appropriate.

One consideration is that robots cannot inherently contain or obey laws; their creators must program these features. Moreover, even today's most sophisticated robots cannot understand or apply the Laws. Still, as robots grow ever more complex, experts continue to express interest in developing guidelines and safeguards for operating them.

To address these emerging issues, Robin Murphy (Raytheon Professor of Computer Science and Engineering at Texas A&M University) and David D. Woods (Director of the Cognitive Systems Engineering Laboratory at Ohio State University) proposed three new laws in "Beyond Asimov: The Three Laws of Responsible Robotics," published in the July/August 2009 issue of IEEE Intelligent Systems:

A human may not deploy a robot without the human-robot system meeting the highest legal and professional standards of safety and ethics.

A robot must respond to humans as appropriate for their roles.

A robot must be endowed with sufficient situated autonomy to protect its own existence as long as such protection provides smooth transfer of control which does not conflict with the first two laws.

Woods noted, "Our rules are a little more realistic, and therefore, a little more boring.... The philosophy has been, 'Sure, people make mistakes, but robots will be better--a perfect version of ourselves.' We wanted to write three new laws to get people thinking about the human-robot relationship in more realistic, grounded ways."

As our interactions with robots grow more intimate and complex, we'll undoubtedly venture into new ethical territory. What laws might we add in the future?

Master Content Brain, Integra Solutions
